This week we are talking about size, which is a subject that should matter to any system administrator in charge of the backup system of any project, and in particular database backups.

I sometimes get asked which compression tool is best for a particular backup system: gzip? bzip2? Something else?

The testing environment

In order to test several formats and tools, I created a .csv file (comma-separated values) that was 3,700,635,579 bytes in size by transforming a recent dump of all the OpenStreetMap nodes of the European portion of Spain. It had a total of 46,741,126 rows and looked like this:

171773 38.6048402 -0.0489871 4 2012-08-25 00:37:46 12850816 472193 rubensd

171774 38.6061981 -0.0496867 2 2008-01-19 10:23:21 666916 9250 j3m

171775 38.6067166 -0.0498342 2 2008-01-19 10:23:21 666916 9250 j3m

171776 38.6028122 -0.0497136 5 2012-08-25 00:26:40 12850816 472193 rubensd

171933 40.4200658 -3.7016652 6 2011-11-29 05:37:42 9984625 33103 sergionaranja

In fact, the original file is really a TSV (tab-separated values), not a CSV, but only because I am too lazy to add the extra FIELDS TERMINATED BY ',' clause when importing to and exporting from MySQL. You can download this file in 7z format, or create your own from the Geofabrik OpenStreetMap Data Extracts.

All tests were done on an almost-idle Intel quad-core i7-3770 @ 3.40GHz with Hyper-Threading (exposing 8 CPUs to the kernel), 32 GB of RAM and two 3 TB spinning disks in RAID 1, all running CentOS 6.5 x86_64. The filesystem type was ext4 with the default OS options.

On-table sizes

For an import to MySQL, I proposed the following table structure:

CREATE TABLE `nodes` ( `id` bigint PRIMARY KEY, `lat` decimal(9,7), `lon` decimal(10,7), `version` int, `timestamp` timestamp, `changeset` bigint, `uid` bigint, `user` varchar(255) );

And these are the on-disk sizes in the database (once we made sure there were no pending write operations):

- MySQL MyISAM data file (.MYD): 2,755,256,596 bytes.(*)

- MySQL InnoDB tablespace (.ibd): 3,686,793,216 bytes.

- MySQL InnoDB tablespace using row_format=compressed (.ibd): 1,736,441,856 bytes.

Why does the data take more space as plain text than in the database? Although databases are optimised for fast access and not for space, we are using a very compact set of datatypes (integers and timestamps instead of strings), which actually saves disk space. This is why a proper database design is critical for performance!
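To make the datatype argument concrete, here is a rough sketch that compares the bytes one sample row takes as text with the bytes its column values would need in the `nodes` table. The per-type sizes are my assumption, taken from MySQL's documented storage requirements, and all per-row and per-page overhead of the storage engine is ignored, so treat the result as an estimate only. The function names are hypothetical.

```python
# Assumed storage cost in bytes of the fixed-width columns of `nodes`
# (from MySQL's documented storage requirements; engine overhead ignored).
FIXED_BYTES = {
    "id bigint": 8,
    "lat decimal(9,7)": 5,   # 2 integer digits -> 1 byte, 7 fractional -> 4 bytes
    "lon decimal(10,7)": 6,  # 3 integer digits -> 2 bytes, 7 fractional -> 4 bytes
    "version int": 4,
    "timestamp timestamp": 4,
    "changeset bigint": 8,
    "uid bigint": 8,
}


def text_row_bytes(fields):
    """Bytes used by one row in the tab-separated file, newline included."""
    return len(("\t".join(fields) + "\n").encode("utf-8"))


def binary_row_bytes(user):
    """Estimated bytes for the same row's column values inside the table."""
    # varchar(255) needs a 1-byte length prefix plus the string itself
    return sum(FIXED_BYTES.values()) + 1 + len(user.encode("utf-8"))


row = ["171773", "38.6048402", "-0.0489871", "4",
       "2012-08-25 00:37:46", "12850816", "472193", "rubensd"]
print(text_row_bytes(row), "bytes as text")
print(binary_row_bytes(row[-1]), "bytes as column values")
```

For comparison, dividing the sizes reported above by the 46,741,126 rows gives about 79 bytes per row for the text file and about 59 bytes per row for the MyISAM data file.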

We can see that one of the few reasons why people still use MyISAM is that it is a very simple and compact format. (*) However, to be fair, we are not taking into account the extra 674,940,928 bytes for the primary key (.MYI), which makes the difference smaller. On the other hand, we are not taking into account either that InnoDB index size goes up quite significantly when using multiple secondary keys (each of them stores the primary key, which matters if it is large), nor the many other structures (tablespace 0, transaction logs) that InnoDB needs to work properly and that are shared with other tables. In general, it is impossible to do a fair comparison between MyISAM and InnoDB because we are comparing apples and oranges.

What is clear is that compression (in this case I used the default InnoDB zlib algorithm with the default compression level, 6) helps reduce on-disk size, which can help in some specific scenarios: fitting more tables on SSDs, or needing fewer IOPS on a disk-bound database. On the other hand, the initial load from a file took significantly longer. I do not want to show time measurements for the different table imports because it is not trivial to account for the actual time to disk given all the buffering that occurs at database level, and giving only the SQL execution time would be unfair. I talk more about import times in this post.

Global results

The on-table sizes are only shown as a reference; our main goal was to test the several tools available for compressing the original nodes.csv file. I constrained myself to some of the most popular ones, and you can see the final results in the following table (analysis, explanation and discussion of the results follow afterwards):

Original size 3700635579 bytes

| method | median compression time (seconds) | compressed size (bytes) | compression ratio (new_size/original_size) | compression cpu usage (unix %CPU) |
|---|---|---|---|---|
| dd | 10.146 | 3700635579 | 100.00% | 97 - 68 |
| gzip | 113.796 | 614119104 | 16.59% | 100 - 89 |
| gzip -1 | 43.219 | 729259339 | 19.71% | 100 - 67 |
| gzip -9 | 266.991 | 585777285 | 15.83% | 97 - 77 |
| bzip2 | 294.568 | 525839069 | 14.21% | 95 - 89 |
| bzip2 -1 | 281.337 | 508276130 | 13.73% | 100 - 80 |
| bzip2 -9 | 295.510 | 585777285 | 15.83% | 100 - 95 |
| pigz | 27.325 | 614093952 | 16.59% | 770 - 547 |
| pigz -1 | 25.982 | 728206796 | 19.68% | 231 - 159 |
| pigz -9 | 51.821 | 585756379 | 15.83% | 773 - 659 |
| pbzip2 | 74.874 | 526311578 | 14.22% | 794 - 663 |
| pbzip2 -1 | 60.487 | 508782016 | 13.75% | 800 - 495 |
| pbzip2 -9* | 76.597 | 526311578 | 14.22% | 773 - 394 |
| lzip | 2138.230 | 357788948 | 9.67% | 100 - 70 |
| 7za | 833.611 | 380728938 | 10.29% | 172 - 145 |
| 7za "ultra" | 1286.044 | 354107250 | 9.57% | 178 - 164 |
| plzip | 216.942 | 376484712 | 10.17% | 768 - 373 |
| plzip -1 | 50.151 | 581552529 | 15.71% | 781 - 738 |
| plzip "ultra" | 426.486 | 354095395 | 9.57% | 785 - 159 |
| lzop | 15.505 | 1003908508 | 27.13% | 95 - 50 |
| lzop -1 | 13.080 | 1000938935 | 27.05% | 90 - 63 |
| lzop -9 | 487.850 | 782234410 | 21.14% | 99 - 89 |
| lz4 | 8.537 | 1043868501 | 28.21% | 93 - 65 |
| lz4 -1* | 8.574 | 1043868501 | 28.21% | 94 - 65 |
| lz4 -9 | 96.171 | 816582329 | 22.07% | 99 - 66 |

As you can see, I evaluated several tools in their default modes, plus a “high-compression mode” and a “fast mode”. For each of them, I tried to evaluate 3 different parameters that are important when creating compressed files: time to completion, final file size and resources used. Please note that I only evaluated compression tools, and not archiving ones (like tar or zip). The latter can usually use different algorithms to compress either each file individually or the final full archive.

The first data column shows the number of seconds of wall clock time that the process took to write the compressed file to a different partition on the same set of RAID disks. Several runs of:

time [application] -c < nodes.csv > /output/nodes.csv.[format]

were executed (except for 7z and dd, where the syntax is different) and the median value was taken, in order to minimise measurement errors due to external factors. For each run, the final file was checked for correctness (the compressed file is deterministic and it can be extracted into the original file without errors or differences) and then deleted. The results are not too scientific, as the filesystem cache can be heavily used for both reads and writes, but I tried to focus on that scenario in particular. An execution of dd (copying the file without compression) is also shown as a control value.
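The procedure above (several timed runs, median taken, output verified on every run) can be sketched in a few lines. This is only an illustration of the method under my own assumptions, not the script used for the article: it works in memory with Python's standard-library codecs instead of the command-line tools, and the `benchmark` helper and the sample data are hypothetical.

```python
import bz2
import gzip
import lzma
import statistics
import time


def benchmark(compress, decompress, data, runs=5):
    """Return (median seconds, compressed size) over several timed runs."""
    timings = []
    size = None
    for _ in range(runs):
        start = time.perf_counter()
        blob = compress(data)
        timings.append(time.perf_counter() - start)
        # Correctness check: the round trip must give back the original bytes.
        if decompress(blob) != data:
            raise ValueError("round trip mismatch")
        size = len(blob)
    return statistics.median(timings), size


if __name__ == "__main__":
    # Hypothetical sample: one row from the article repeated many times.
    row = b"171773\t38.6048402\t-0.0489871\t4\t2012-08-25 00:37:46\t12850816\t472193\trubensd\n"
    sample = row * 20000
    for name, codec in (("gzip", gzip), ("bzip2", bz2), ("lzma", lzma)):
        seconds, size = benchmark(codec.compress, codec.decompress, sample)
        print(f"{name}: {seconds:.3f} s, {size} bytes, {100 * size / len(sample):.2f}%")
```

Taking the median rather than the mean keeps a single slow run (for example, one that hit a cold cache) from skewing the figure.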

I think the second and third data columns are self-explanatory: the file size, in bytes, of the compressed file and how it compares with the original file.
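As a quick worked example of the third column, the ratio is just the compressed size divided by the original size, expressed as a percentage. The helper name is hypothetical; the byte counts come from the table.

```python
ORIGINAL_SIZE = 3700635579  # bytes, size of nodes.csv as given in the table


def compression_ratio(compressed_size, original_size=ORIGINAL_SIZE):
    """Compressed size as a percentage of the original size (lower is better)."""
    return 100.0 * compressed_size / original_size


print(f"gzip -9: {compression_ratio(585777285):.2f}%")
print(f"lz4:     {compression_ratio(1043868501):.2f}%")
```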

The last column tries to measure the maximum and minimum CPU usage, as reported by the operating system during compression. However, due to the CPU scheduler, the fact that most tools have a synchronisation period at the beginning and at the end of the execution, and the fact that the value was obtained by polling at intervals, it is not very significant except for checking the parallelism of the algorithm used. Values greater than 100 mean that more than one core/thread is being used for compression.

I did not record the memory usage (the other important resource) because, even in ultra modes, it was not significant for my 32 GB machine (less than 1/2 GB every time, and most of the time much less). I considered it something one should not worry about too much on a machine that should have enough free RAM, like a database server. What you probably would like to take into account is the effect on the filesystem cache, as that could directly impact MySQL performance. You can keep backup page reads and writes from going into the filesystem cache by playing around with the POSIX_FADV_DONTNEED flag. I also want to mention that there are tools, like bzip2, that have a small footprint mode: bzip2 --small.
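As an illustration of the POSIX_FADV_DONTNEED idea, the following sketch copies a file and then asks the kernel to drop the cached pages of both the source and the destination. It is a minimal sketch under my own assumptions: the function name is hypothetical, `os.posix_fadvise` is only available on some platforms (Linux among them), and the call is advisory, so the kernel is free to ignore it.

```python
import os


def copy_without_caching(src, dst, chunk=1 << 20):
    """Copy src to dst, then ask the kernel to drop cached pages of both files."""
    advise = getattr(os, "posix_fadvise", None)  # missing on some platforms
    copied = 0
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            block = fin.read(chunk)
            if not block:
                break
            fout.write(block)
            copied += len(block)
        fout.flush()
        os.fsync(fout.fileno())  # dirty pages cannot be dropped until written
        if advise is not None:
            # offset 0 with length 0 means "the whole file"
            advise(fin.fileno(), 0, 0, os.POSIX_FADV_DONTNEED)
            advise(fout.fileno(), 0, 0, os.POSIX_FADV_DONTNEED)
    return copied
```

The same pattern would apply around a compression step; the point is only that the backup's own I/O does not have to evict the pages the database relies on.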

You can find the measurements of decompression times in a follow-up post: Part II.

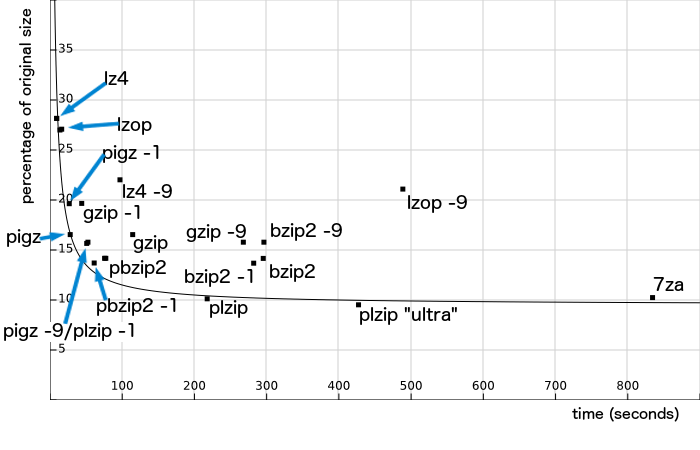

The global results may be appreciated much more clearly plotted on a bidimensional graph. I have plotted the obtained values with the time to compression on the X axis (lower is better) and the compression ratio on the Y axis (lower is better):

Not plotted: dd (100% compression ratio), 7za “ultra” (>21 minutes for compression) and lzip (>35 minutes for compression).

In general, we can see that there are no magical tools, and that a better compression ratio requires more time (size is roughly inversely proportional to time). I have also plotted the function y = 200/x + 9. That, or something like y = 200/x + 9.5 (it is difficult to fit a good curve with so few data points, most of them unrelated), seems to provide the lower limit of ratio per unit of time, suggesting that 9%-9.5% would be the best compression ratio obtainable for that file with the tools available at the moment.
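To see why that curve points at a floor of 9%-9.5%, it helps to evaluate it for increasingly long compression times: the 200/x term fades away and only the constant remains. The function name below is hypothetical; the formula is the one given above.

```python
def ratio_floor(seconds, offset=9.0):
    """The fitted curve y = 200/x + offset, in percent of the original size."""
    return 200.0 / seconds + offset


for seconds in (60, 600, 6000):
    low = ratio_floor(seconds)        # y = 200/x + 9
    high = ratio_floor(seconds, 9.5)  # y = 200/x + 9.5
    print(f"{seconds:>5} s: {low:.2f}% to {high:.2f}%")
```

Going from one minute to ten minutes moves the curve by about three percentage points, while going from ten minutes to one hundred minutes moves it by less than half a point.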

Let’s analyse the weaknesses and strong points of every compression format.

The well-known gzip and bzip2

If you want compatibility, gzip and bzip2 are the kings. Not only are they widely recognised compression formats, but the tools to compress and decompress them are preinstalled in most unix-like operating systems. Windows is probably the only operating system that doesn’t support gzip by default. gzip and bzip2 are among the few compression formats with their own option letter in tar (together with compress on BSD and xz on GNU).

Compatibility and availability are the strong points of these tools; however, if we look at the graph, we can see that they are relatively far from the line I mentioned as “ideal” in time/size ratio. bzip2 provides a better compression ratio than gzip in exchange for more CPU cycles, but both tools are single-threaded and they do not shine in any aspect. Surprisingly enough, bzip2 -1 gave me hardly any improvement in compression time, and a better ratio, compared with the standard bzip2 execution, and the bzip2 manual provides an explanation for that:

The --fast and --best aliases are primarily for GNU gzip compatibility. In particular, --fast doesn't make things significantly faster. And --best merely selects the default behaviour.

Probably the best use I would recommend for these tools is gzip --fast (equivalent to gzip -1): while it does not provide a great compression ratio, it is very fast even for a single-threaded application. So it can be useful in those cases where we want to maximise speed without taking many resources. In other cases, where tool availability is not a problem, I would recommend trying other tools with either better speed or a better compression ratio.
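If you want to get a feeling for what the levels do on your own data before committing to one, a small experiment is enough. The sketch below uses Python's standard `gzip` module (which implements the same format, though not the same code path as the command-line tool) and a hypothetical helper name; levels 1, 6 and 9 correspond to --fast, the default and --best.

```python
import gzip


def sizes_by_level(data, levels=(1, 6, 9)):
    """Map each gzip compression level to the compressed size in bytes."""
    return {level: len(gzip.compress(data, compresslevel=level)) for level in levels}


if __name__ == "__main__":
    # Hypothetical sample shaped like the article's rows, with varying ids.
    rows = [f"{171773 + i}\t38.6048402\t-0.0489871\t4\t2012-08-25 00:37:46\t12850816\t472193\trubensd\n"
            for i in range(50000)]
    sample = "".join(rows).encode("utf-8")
    for level, size in sizes_by_level(sample).items():
        print(f"level {level}: {size} bytes ({100 * size / len(sample):.2f}%)")
```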

I used gzip 1.3.12 (GNU) and bzip2 1.0.6.

Parallel compression tools: pigz and pbzip2

Things get more interesting if you use the parallel versions of gzip and bzip2 on a multi-core system. While there is more than one implementation, I chose pigz 2.3.1 and pbzip2 1.1.6 for my tests. While they are not part of the official Red Hat/CentOS repositories, they can be found in the EPEL and Debian repositories.

Both tools auto-detected the number of cores I had and performed the compression in 8 threads, providing comparable compression ratios in about a quarter of the time. The obvious downside is that in a high-demand environment, like a MySQL server under considerable load, you may not want to (or may not be able to) give full CPU resources to the backup process. But if you are doing the compression on a separate, dedicated server, parallelization is something you should take advantage of, as in general the CPU will be the main bottleneck of a compression algorithm.
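The general idea behind this kind of parallelism can be sketched as follows: split the input into chunks, compress each chunk independently in a pool of workers, and concatenate the results. Note that this is a simplified technique of my own choosing (independent gzip members joined together, which is a valid gzip file), not a description of how pigz or pbzip2 are actually implemented. The function name and defaults are hypothetical, and the speed-up relies on the assumption that CPython's zlib releases the interpreter lock while compressing.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor


def parallel_gzip(data, workers=4, chunk_size=1 << 20, level=6):
    """Compress chunks independently and concatenate them as gzip members."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)] or [b""]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so members come out in file order.
        members = pool.map(lambda chunk: gzip.compress(chunk, compresslevel=level), chunks)
        return b"".join(members)


if __name__ == "__main__":
    sample = b"171773\t38.6048402\t-0.0489871\t4\trubensd\n" * 200000
    packed = parallel_gzip(sample, workers=8)
    assert gzip.decompress(packed) == sample  # multi-member data decompresses as one
    print(len(sample), "->", len(packed), "bytes")
```

The `workers` argument plays the same role as the thread-limiting options of the real tools: lowering it leaves CPU for the database at the cost of a longer backup. Compressing chunks independently usually costs a little ratio, since each chunk starts without any history.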

Again, as a highlight, pigz with the default parameters gave me a good compression ratio (16.59%) in less than 28 seconds; that is compressing at close to 130MB/s on my modest hardware (more than a third of my copy rate, 350MB/s).
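Those throughput figures can be reproduced from the table by dividing the original size by the elapsed time. The sketch assumes that "MB" here means 1024 × 1024 bytes, which is the reading that matches the numbers quoted; the helper name is hypothetical.

```python
MIB = 1024 * 1024
ORIGINAL_SIZE = 3700635579  # bytes


def throughput_mib_s(num_bytes, seconds):
    """Input bytes processed per second, in units of 1024*1024 bytes."""
    return num_bytes / MIB / seconds


pigz = throughput_mib_s(ORIGINAL_SIZE, 27.325)  # pigz, default level
copy = throughput_mib_s(ORIGINAL_SIZE, 10.146)  # dd, plain copy
print(f"pigz: {pigz:.1f} MB/s, dd: {copy:.1f} MB/s, fraction: {pigz / copy:.2f}")
```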

As a side note, while pbzip2 accepts a compression level as a parameter, the default compression level is -9.

lzma implementations: lzip, 7zip and plzip

The next tests were simply different implementations of lzma, an algorithm with a reputation for providing very good compression ratios.

I started with lzip. It is not in the official repositories, so I got it from EPEL, installing lzip 1.7. The compression ratio was indeed better than that of all the previous algorithms (9.67%), but it took 35 minutes and 38 seconds to produce the output. The algorithm was not the only thing to blame: lzip uses a single thread, hence the delay.

After that, I tried p7zip 9.20, in particular the unix tool 7za. This was the only tool tested that did not follow the gzip-style parameters. I had to execute it using:

time 7za a /output/nodes.csv.7z nodes.csv

Please note that p7zip is an archiver tool, but I made an exception in order to test an alternative implementation of lzma.

The results were better: while the tool provided a slightly worse compression ratio (10.29%), thanks to some kind of execution in more than one thread, the execution time was reduced to just under 14 minutes. I also tested a suggested “ultra” mode found in the 7za manual, with the following parameters:

-t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on

In short: maximising the memory usage, compression level and dictionary size, aside from enforcing the archive format and compression algorithm. While this produced a smaller file (but only 25 MB smaller, less than 1% of the original file size), the time went up to more than 21 minutes.
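For readers who want to experiment with similar knobs without installing p7zip, Python's standard `lzma` module exposes comparable parameters. This is only a loose analogue under my own assumptions: it writes the xz container with the LZMA2 filter rather than a 7z archive with lzma, and I am mapping -mx=9 to `preset`, -md=32m to `dict_size` and -mfb=64 to `nice_len`, so sizes and timings will not match the table. The function name is hypothetical.

```python
import lzma


def lzma_ultra(data, dict_size=32 * 1024 * 1024, nice_len=64):
    """Compress with a custom LZMA2 filter chain, roughly in the spirit of "ultra"."""
    filters = [{
        "id": lzma.FILTER_LZMA2,
        "preset": 9,             # start from the highest preset
        "dict_size": dict_size,  # dictionary size in bytes
        "nice_len": nice_len,    # match length considered "good enough"
    }]
    return lzma.compress(data, format=lzma.FORMAT_XZ, filters=filters)


if __name__ == "__main__":
    sample = b"171773\t38.6048402\t-0.0489871\t4\trubensd\n" * 100000
    packed = lzma_ultra(sample)
    assert lzma.decompress(packed) == sample
    print(len(sample), "->", len(packed), "bytes")
```

Keep in mind that a larger dictionary increases the memory needed by the compressor considerably, which is the trade-off the "ultra" settings make.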

I wanted to try a truly parallel implementation of lzma, and plzip was exactly that. I could not find an rpm package anywhere, so I downloaded Lzlib 1.5 and plzip 1.2-rc2 and installed them from source. The results were really good, as expected. plzip provided results comparable to “pigz -9” when running in “fast mode”; by default, in only 3m37s I got a 359MB compressed file, or 10.17% of the original size. I then tried to emulate the ultra settings of p7zip (with -9 -m 64 -s 33554432) and got the winning tool in compression ratio (9.57%) in only 7 minutes and 6.486 seconds.

Obviously, the same restrictions that I mentioned for the other parallel tools apply here: using multiple CPUs may be discouraged on a very busy server, but if you are storing the backups long-term on a separate server, you may want to have a look at this possibility. In any case, most parallel tools have a way to limit the number of threads created (for example, with the --threads option in plzip).

Fast compression tools: lzop and lz4

I didn’t want to finish my testing without having a look at some of the high-bandwidth compression tools, and I chose 2: lzop and lz4. While I had to install lz4 r119 from EPEL, lzop v1.02rc1 is part of the base packages of Red Hat/CentOS.

They both deliver what they promise: very fast compression (in some cases faster than a plain copy of the file, as they are not CPU-bound and have less data to write) in exchange for worse compression ratios (21-30%). For the example file, on my machine, I got better performance from lz4 than from lzop, which offered similar ratios but took longer (8.5 vs. 15.5 seconds). So if I had to choose, I would probably use lz4 over lzop in my particular case. Additionally, although I have not tested it, lz4 boasts better decompression speeds.

As a negative highlight, I would recommend against using lzop -9, as there are tools that can get you better compression ratios in half the time. lz4 did not perform well with a higher compression level either, so I recommend sticking to the defaults or lower compression levels for these tools (in fact, lz4 defaults to -1).

Conclusion

I didn’t test other tools like compress (Lempel-Ziv), xz (lzma2) or QuickLZ, but I do not expect many deviations from the patterns we have seen: the smaller the output, the longer it takes to produce. If you want fast compression times, go for lz4. If you want a small file size, go for an implementation of lzma, like p7zip. The bzip2 and gzip formats are good options when compatibility is important (e.g. releasing a file), but when possible, use a parallel implementation to improve performance (plzip, pbzip2, pigz). We can even use a combination of tools for our backups: for example, export our tables in binary format using lz4 to get them off the MySQL server and later, on a separate server, convert them to lzma for long-term storage.
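The two-stage idea from the last sentence can be sketched as two small functions: a cheap first pass on the database server, and a recompression step that runs elsewhere. Since lz4 is not part of Python's standard library, the sketch uses zlib at level 1 as a stand-in for the fast stage; that substitution, the function names and the default preset are all my own assumptions.

```python
import lzma
import zlib


def fast_export(data):
    """Stage 1, on the database server: cheap, fast compression (lz4 stand-in)."""
    return zlib.compress(data, 1)


def archive(fast_blob, preset=6):
    """Stage 2, on a separate server: recompress with lzma for long-term storage."""
    return lzma.compress(zlib.decompress(fast_blob), preset=preset)


if __name__ == "__main__":
    dump = b"171773\t38.6048402\t-0.0489871\t4\trubensd\n" * 100000
    shipped = fast_export(dump)
    stored = archive(shipped)
    assert lzma.decompress(stored) == dump
    print(len(dump), "->", len(shipped), "->", len(stored), "bytes")
```

Raising `preset` trades more CPU time and memory on the archive server for a smaller file, which is exactly where that cost is cheapest to pay.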

I would also encourage you to try the compression methods on your particular dataset and hardware, as you may get different compression ratios and timings, especially depending on the amount of memory available for filesystem caching, your CPU(s) and your read and write rates from secondary storage. What I have tried to provide here, however, is a starting point for you to reach your own conclusions.

Do you agree with me? Do you think I am wrong at some point? Did I miss something? Write a comment or send me a reply on Twitter or by email.

Check out Part II of this analysis for my research on decompression times.

This seems to be missing xz in the comparison. It is replacing uses of bzip2 more and more, recently.